Why Evaluate Education and Training?

Within health and aged care, education and training are only effective if new knowledge and skills move from the learning environment to the interprofessional practice environment. Achieving this is a complex task for healthcare clinicians and staff because the desired outcome (improved care) often requires sustained behavioural change.

What is a Post-training Assessment?

A post-training assessment is a basic evaluation tool administered after the completion of a training course or any other work-related education. The primary aim of a post-training assessment is to gauge the efficacy of the learning materials, learning experience and educators' teaching process (Wiliam 2011). Additionally, by asking questions that directly relate to the learning objectives and goals of the training, post-training assessments offer insights into participants' training outcomes, including how they will apply what they’ve learned to practice.

The purpose of post-training assessments is not just to collect rudimentary feedback. When designed and administered appropriately, they can provide valuable insights, such as whether the education is effective and whether it goes on to achieve the desired results.

What Data Should a Post-Training Assessment Collect?

The New World Kirkpatrick Model is a simple, validated evaluation model that L&D teams can use to determine what data their post-training assessment should collect. The model is appealing because it offers evaluation options suited to different types of learning activity. It contains a four-level evaluation process, with each level building on the previous one. The New World Kirkpatrick Model is used extensively to evaluate the effectiveness of education because it provides a framework for obtaining, organising and analysing data from learning activities, although many other evaluation models also exist.


Level 1: Reaction

This level measures how engaging staff find the training and how relevant it is to their job roles. It captures attendance and engagement and allows learners to evaluate how closely the training aligns with their real-life work experience.

Level 2: Learning

Here, the focus is on assessing whether staff have gained the intended knowledge, skills, attitudes, confidence, and commitment from the training.

While achieving levels 1 and 2 of the Kirkpatrick Model is relatively straightforward, meeting tighter regulatory standards such as the strengthened Aged Care Quality Standards requires evaluating at levels 3 and 4 as well.

Level 3: Behaviour

This level assesses the extent to which staff apply what they’ve learned in training when performing their roles.

Level 4: Results

According to Kirkpatrick and Kirkpatrick (2020), the ultimate goal of training is to achieve specific outcomes that stem from the training, support, and accountability measures. Many providers struggle to demonstrate that their training packages improve the quality of care, a gap that must be addressed as audit standards increasingly require this type of evidence.

Gathering this type of data requires more time for post-training reflective learning and for discussions about implementing best practice than a single post-training assessment can provide.

What Should a Post-Training Assessment Evaluate?

The type of education or training being delivered and the specific desired learning outcome of that activity will influence the data collected in a post-training assessment. The depth, length and time it takes to administer a post-training assessment should align with the depth and duration of the actual learning activity.

Using the New World Kirkpatrick Model of Evaluation, here are examples of data that can be collected across each level.

Examples - Level 1: Reaction

Post-training assessments that focus on Level 1: Reaction gather information about the learner’s reaction to, or satisfaction with, the activity. For example:

- A survey with short questions on attitude toward the material, subject matter expertise and overall satisfaction with the training, given immediately after training or education.
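As a minimal sketch of how Level 1 survey data might be summarised, the Python below assumes each response is a set of 1 to 5 Likert ratings. The question names, the sample responses and the rule that a rating of 4 or more counts as agreement are all hypothetical choices for illustration, not part of the Kirkpatrick Model.

```python
from statistics import mean

# Hypothetical Level 1 survey responses: each maps a question to a
# 1-5 Likert rating (1 = strongly disagree, 5 = strongly agree).
responses = [
    {"relevance": 5, "engagement": 4, "overall_satisfaction": 5},
    {"relevance": 4, "engagement": 3, "overall_satisfaction": 4},
    {"relevance": 2, "engagement": 4, "overall_satisfaction": 3},
]

def summarise_reaction(responses, agree_threshold=4):
    """Return the mean rating and % of respondents at or above the
    (assumed) agreement threshold for each question."""
    if not responses:
        return {}
    summary = {}
    for question in responses[0]:
        ratings = [r[question] for r in responses]
        agree = sum(1 for x in ratings if x >= agree_threshold)
        summary[question] = {
            "mean": round(mean(ratings), 2),
            "percent_agree": round(100 * agree / len(ratings), 1),
        }
    return summary

print(summarise_reaction(responses))
```

Reporting the share of respondents who agree alongside the mean makes it harder for a few very low or very high ratings to hide how the group as a whole reacted.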

Examples - Level 2: Learning

Level 2 data collected in a post-training assessment aims to evaluate the degree to which learners acquire the intended knowledge, skills, attitude and confidence. It also assesses the degree to which learners perceive they can apply that knowledge after learning. It may be collected during or shortly after learning. Examples include:

- Multiple choice or true or false quiz questions to assess acquisition of new knowledge or reinforcement of existing knowledge.

- Short-answer or longer-form questions to demonstrate attitude towards the learning and confidence.

- Responses to a case scenario or a problem-based exercise to demonstrate subjective skill acquisition.

- Return skill demonstration, which is the most accurate way to verify skill acquisition.
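To illustrate how multiple choice or true/false items might be scored, here is a minimal Python sketch. The answer key, question IDs and 80% pass mark are hypothetical; your own assessment policy would set these.

```python
# Hypothetical answer key for a short Level 2 quiz mixing
# multiple choice ("a"-"d") and true/false items.
ANSWER_KEY = {"q1": "b", "q2": True, "q3": "d", "q4": False}

def score_quiz(answers, key=ANSWER_KEY, pass_mark=80.0):
    """Return (percentage correct, passed) for one learner.

    Unanswered questions count as incorrect. The 80% pass mark is an
    assumed default, not a standard.
    """
    correct = sum(1 for q, expected in key.items() if answers.get(q) == expected)
    percent = 100 * correct / len(key)
    return percent, percent >= pass_mark

# One learner's answers (hypothetical): three of four correct.
print(score_quiz({"q1": "b", "q2": True, "q3": "a", "q4": False}))  # (75.0, False)
```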

Examples - Level 3: Behaviour

This level of evaluation aims to link learning and behaviour by measuring the transfer of knowledge, skills and attitudes from the learning setting to the practice setting. Standard methods for collecting level 3 data include self-reported behaviour change via survey and direct observation (if feasible). These methods are within the scope of a post-training assessment, but this type of evaluation would typically occur three to six months after learning. The assessment would include reflection on questions such as:

- Could they apply the learning?

- Were there barriers to implementation?

- Could they overcome barriers?

- Are they still doing it? Was change sustained?
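Level 3 evaluation typically happens three to six months after learning, so it can help to calculate each learner's follow-up window when they complete the training. The Python sketch below does this with the standard library only. The function names are hypothetical. Clamping to the last day of a shorter month (for example, 30 November plus three months becomes 28 February) is one assumed convention among several.

```python
import calendar
from datetime import date

def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole months.

    If the target month is shorter (e.g. 30 Nov + 3 months), the day is
    clamped to the last day of that month.
    """
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))

def level3_window(completed: date, earliest_months: int = 3, latest_months: int = 6):
    """Return (opens, closes) dates for a Level 3 behaviour follow-up survey."""
    return add_months(completed, earliest_months), add_months(completed, latest_months)

opens, closes = level3_window(date(2024, 11, 30))
print(opens, closes)  # 2025-02-28 2025-05-30
```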

Examples - Level 4: Results

Level 4 data measures the ultimate goal of training: not just the training outcomes, but the impact of those outcomes on the organisation. Post-training assessments delivered to participants may help to gather this data.

Other sources of data are also required to calculate the return on investment (ROI) of a particular training activity, or to measure the impact of education on staff retention and on other cost savings, such as a reduction in falls or pressure injuries. This data includes:

- National mandatory quality indicator data, which can be used to correlate cost savings with education and quality initiatives.

- Internal data such as complaints, feedback, staff satisfaction and average tenure of staff.
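The article does not prescribe an ROI formula. One commonly used convention expresses ROI as net benefits divided by costs, as a percentage: ROI (%) = (monetary benefits - training costs) / training costs × 100. The Python sketch below applies that convention. Every figure is hypothetical, and treating a drop in falls as a benefit caused by the training is an assumption that needs supporting evidence from the data sources listed above.

```python
def training_roi(monetary_benefits: float, training_costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100."""
    if training_costs <= 0:
        raise ValueError("training_costs must be positive")
    return (monetary_benefits - training_costs) / training_costs * 100

# Illustrative inputs only; none of these figures come from real data.
falls_avoided = 6           # hypothetical drop seen in quality indicator data
cost_per_fall = 5_000.0     # hypothetical average cost of one fall
training_costs = 20_000.0   # hypothetical delivery, backfill and materials

benefits = falls_avoided * cost_per_fall
print(training_roi(benefits, training_costs))  # 50.0
```

A positive result means the estimated benefits exceeded the costs. A result of 0 means the training roughly broke even.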

Administering Post-training Assessments

Whichever level of data you are aiming to collect, here are some common formats and modes of delivery of post-training assessments:

Showcasing Your Evaluation Data

- Self-assessment or audit preparation tools: Specifically in aged care, providers must complete a pre-audit assessment tool when assessed against the strengthened Aged Care Quality Standards. This tool, which may also act as a provider’s self-assessment or plan for continuous improvement (PCI), can draw on training data and learning records to demonstrate how a provider has met a particular outcome.

- Identification of unmet learning gaps: By identifying which learning objectives of the training were not met, these assessments can highlight areas where learners may need additional education or practice, supporting future activity planning.

- Continuous improvement: Post-training assessments provide a mechanism for gathering data that can inform future training initiatives. This cycle of continuous improvement is essential for maintaining a high standard of education.

- Performance reviews: Evaluation data from training is useful in performance appraisals for L&D professionals, as it can demonstrate learning goals attained across the review period, the impact on an organisation, and the value and benefits of investing in education and training.

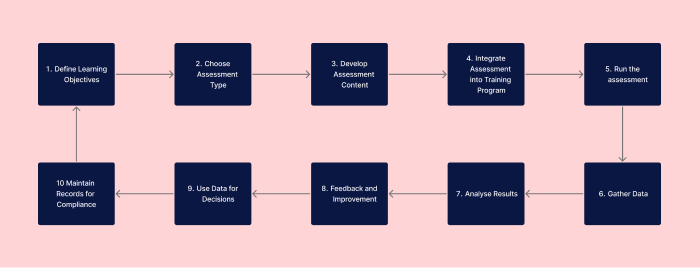

Steps to Perform Post-Training Assessments

Below are the steps to implement post-training assessments in your workplace.

- Define Assessment Objectives: Clarify what you want to achieve with the assessment. Your objectives should align with the learning goals of the training program and the larger needs of the organisation.

- Choose the Right Assessment Type: Different training goals may require different types of assessments, such as multiple-choice tests, simulations, or hands-on assessments for practical skills. Choose the tool that best matches the skills or knowledge you are trying to evaluate.

- Develop Assessment Content: Once you've chosen a tool, the next step is to create the actual questions or challenges that will make up the assessment. These should be directly related to the training materials and designed to be both challenging and fair.

- Integrate into Training Program: Insert your assessments at the appropriate points in your training sessions or online training modules. This could be immediately following each section or at the end of the training course.

- Run the Assessment: Administer it to all training participants. This could be in a classroom setting, online through a learning management system, or even in a real-world job environment, depending on the nature of the training.

- Gather Data: Once the assessment is part of your training program, collect the data from these evaluations. This might involve automated scoring through a learning management system or manual grading for more qualitative assessments.

- Analyse Results: Use analytics to review the data. This could involve simple percentage-based pass rates or more complex statistical analyses for larger training programs.

- Feedback and Improvement: Use the data to provide feedback to training instructors and trainees. Discuss areas for improvement and what is working well.

- Make Data-Driven Decisions: Based on the data gathered, make decisions to refine and improve training. This may include revising course materials, updating learning objectives, or even rethinking your entire approach to training.

- Maintain Records for Compliance: Finally, ensure that all assessment results are securely stored and easily accessible to comply with Australian healthcare standards and regulations. This data may need to be presented during audits or evaluations by governing bodies.
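The "Analyse Results" step mentions simple percentage-based pass rates. As one possible sketch, the Python below groups item-level quiz results by learning objective and flags any objective whose pass rate falls below a target. This output could also help identify unmet learning gaps. The record layout, learner and objective names, and the 80% target are hypothetical.

```python
from collections import defaultdict

# Hypothetical item-level results: (learner, learning objective, answered correctly?)
results = [
    ("learner_a", "hand_hygiene", True),
    ("learner_a", "falls_risk", False),
    ("learner_b", "hand_hygiene", True),
    ("learner_b", "falls_risk", True),
    ("learner_c", "hand_hygiene", True),
    ("learner_c", "falls_risk", False),
]

def pass_rates_by_objective(results):
    """Return the percentage of correct responses for each learning objective."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for _learner, objective, is_correct in results:
        totals[objective] += 1
        correct[objective] += int(is_correct)
    return {obj: 100 * correct[obj] / totals[obj] for obj in totals}

def unmet_objectives(rates, target=80.0):
    """List objectives whose pass rate falls below the (assumed) target."""
    return sorted(obj for obj, rate in rates.items() if rate < target)

rates = pass_rates_by_objective(results)
print(unmet_objectives(rates))  # ['falls_risk']
```

Grouping results by objective rather than reporting a single overall score shows where follow-up education is most needed.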

What Tools Can I Use to Perform Post-Training Assessment?

Various tools can be used for post-training assessments, although not all of them integrate with your learning management system:

- Learning Management Systems (LMS): Platforms specifically designed for healthcare training can provide specialised types of assessments and even tailor-made training modules.

- Survey Tools: Software like SurveyMonkey or Qualtrics can be used for post-training evaluation surveys, offering both preset and customisable question sets. However, these platforms likely sit outside your existing learning management system (with no direct link to your existing learning data) and are not specific to healthcare requirements.

- Analytics Software: Advanced analytics tools can offer deep insights into training effectiveness, going beyond simple survey responses to provide actionable data. Like the survey tools above, however, they can prove cumbersome because they sit outside your existing learning management platform.

Need an LMS with comprehensive training assessment support?

Contact Ausmed today and see how we can support your organisation!

Conclusion

Post-training assessments are a crucial component of evaluating training programs, as they help educators assess whether new knowledge and skills are successfully transferred from the learning environment to real-world practice. Given the complexity of achieving sustained behavioural change in healthcare settings, these assessments need to align with the desired learning objectives and be carefully structured and administered to obtain accurate data.

By leveraging learning analytics, L&D teams and educators can better articulate and demonstrate how education and training initiatives support organisational goals and enhance the quality of care.


References

- Wiliam, D. (2011). What is assessment for learning? Studies in Educational Evaluation, 37(1), 3-14.

- The New World Kirkpatrick Model

Author

Zoe Youl

Zoe Youl is a Critical Care Registered Nurse with over ten years of experience at Ausmed, currently as Head of Community. With expertise in critical care nursing, clinical governance, education and nursing professional development, she has built an in-depth understanding of the educational and regulatory needs of the Australian healthcare sector.

As the Accredited Provider Program Director (AP-PD) of the Ausmed Education Learning Centre, she maintains and applies accreditation frameworks in software and education. In 2024, Zoe led the Ausmed Education Learning Centre (AELC) to achieve Accreditation with Distinction for the fourth consecutive cycle with the American Nurses Credentialing Center’s (ANCC) Commission on Accreditation. The AELC is the only Australian provider of nursing continuing professional development to receive this prestigious recognition.

Zoe holds a Master's in Nursing Management and Leadership, and her professional interests focus on evaluating the translation of continuing professional development into practice to improve learner and healthcare consumer outcomes. From 2019 to 2022, Zoe provided an international perspective to the workgroup established to publish the fourth edition of Nursing Professional Development Scope & Standards of Practice. Zoe was invited to be a peer reviewer for the 6th edition of the Core Curriculum for Nursing Professional Development.